Dear Futurists,

What’s the most important book of the decade?

Read on to discover which book is described that way by Max Tegmark, the President of the Future of Life Institute – and himself the author of two books that I particularly admire (Our Mathematical Universe and Life 3.0).

Read on, moreover, to find out about an unofficial launch event happening in London for the book in question, as well as many other events or projects with a futurist angle.

In short, expect a number of bold claims, with the common theme of building and securing a better future.

1.) Unofficial Book Launch Party: IABIED

If you’ve not yet come across the acronym IABIED, let me spell it out for you:

If Anyone Builds It, Everyone Dies.

That, in just six words, is the case against building Superintelligent AI, whilst humanity is still so poorly prepared to control what will be unleashed.

That six-word phrase is the title of the book by Eliezer Yudkowsky and Nate Soares, both of MIRI (the Machine Intelligence Research Institute), which becomes available on the 22nd of September.

There’s a commendation by Stephen Fry on the cover of the book: “A loud trumpet call to humanity to awaken us as we sleepwalk into disaster”.

Fry gives a longer endorsement inside the book itself:

“The most important book I’ve read for years: I want to bring it to every political and corporate leader in the world and stand over them until they’ve read it. Yudkowsky and Soares, who have studied AI and its possible trajectories for decades, sound a loud trumpet call to humanity to awaken us as we sleepwalk into disaster. Their brilliant gift for analogy, metaphor and parable clarifies for the general reader the tangled complexities of AI engineering, cognition and neuroscience better than any book on the subject I’ve ever read, and I’ve waded through scores of them. We really must rub our eyes and wake the fuck up!”

Emmett Shear, former interim CEO of OpenAI, puts it like this: “Soares and Yudkowsky lay out, in plain and easy-to-follow terms, why our current path toward ever-more-powerful AIs is extremely dangerous.”

Musician and critic Grimes adds her reflections: “You will feel actual emotions when you read this book. We are currently living in the last period of history where we are the dominant species. Humans are lucky to have Soares and Yudkowsky in our corner, reminding us not to waste the brief window of time that we have to make decisions about our future in light of this fact.”

This is from renowned cybersecurity expert Bruce Schneier: “A sober but highly readable book on the very real risks of AI. Both sceptics and believers need to understand the authors’ arguments, and work to ensure that our AI future is more beneficial than harmful.”

George Church, Professor of Genetics at Harvard University, provides this recommendation: “This book offers brilliant insights into history’s most consequential standoff between technological utopia and dystopia, and shows how we can and should prevent superhuman AI from killing us all. Yudkowsky and Soares’s memorable storytelling about past disaster precedents … highlights why top thinkers so often don’t see the catastrophes they create.”

And from Ben Bernanke, Nobel laureate and former Chairman of the Federal Reserve: “A clearly written and compelling account of the existential risks that highly advanced AI could pose to humanity. Recommended.”

That’s just a sample of the advance praise you can find on the book’s website or on online bookseller sites.

These comments include the commendation I mentioned above, from Max Tegmark: “The most important book of the decade … This captivating page-turner, from two of today’s clearest thinkers, reveals that the competition to build smarter-than-human machines isn’t an arms race but a suicide race, fuelled by wishful thinking.”

Want to find out more?

Friends of London Futurists at PauseAI have organised an unofficial book launch at Newspeak House on Monday 22nd September. For more information about that event, and to register to attend, visit Luma.

I look forward to seeing many of you there.

If you can’t wait until then, there’s an opportunity to join a two-hour-long conversation between the two authors later today, Thursday 4th September, from 8pm UK time. To receive an invite for that event, you’ll need to provide evidence that you have pre-ordered the book. See here.

Another way to warm up for the book is to listen to this recording of a London Futurists Podcast conversation featuring Nate Soares.

I attended an earlier online discussion about the book, on 10th August, between Tim Urban and Nate Soares. At the end, I tweeted the following:

“To simplify (but not too much) the conclusion of today’s Q&A session with Tim Urban and Nate Soares: If Everyone Reads This Book, No-One Dies.”

(I did warn you that there would be some bold claims in this newsletter…)

2.) Invitation to Collaborate: Existential Risk InfoHub

We live in a time of what seems to be an exponential explosion, not only of AI capability, but also of information and opinions:

- About the future capability of AI

- About how advanced AI might interact, either constructively or destructively, with the full spectrum of risks of catastrophe facing humanity.

With so much information and, yes, misinformation, it’s easy to become overwhelmed.

What we urgently need isn’t yet more information but information that is better curated, better organised, and more accessible.

That goal has been a driving ambition of London Futurists throughout the seventeen years that I’ve been organising its events and newsletters.

In that light, I am pleased to highlight the EXTRA InfoHub produced by the EXTRA working group of the WAAS (World Academy of Art and Science).

Here, EXTRA stands for EXistential Threats and Risks to All.

On Saturday 20th September, a London Futurists Webinar will take place featuring members of the EXTRA Working Group. Our guests will be discussing:

- Their vision for the EXTRA InfoHub

- How organisations and individuals can become involved

- Preliminary findings from a study of what the Working Group describes as “20 Notable Reports on Existential Threats and Risks”.

Be aware that this will be starting FOUR HOURS EARLIER in the day than most of our other webinars. That’s because one of the panellists is located in Melbourne, Australia.

For more details, and to register to take part, click here.

3.) The future of global AI governance

Looking further ahead to Saturday 4th October – this time at our usual start time of 4pm UK time:

On that occasion, we’ll be joined online by panellists who have all been involved in various ways with international behind-the-scenes conversations about options for the future of global AI governance:

- Sean O hEigeartaigh, Director, AI: Futures and Responsibility Programme, University of Cambridge

- Kayla Blomquist, Director, Oxford China Policy Lab

- Dan Faggella, CEO and Head of Research, Emerj Artificial Intelligence Research

- Duncan Cass-Beggs, Executive Director, Global AI Risks Initiative

- Robert Whitfield, Executive Director, GAIGANow.

As with all our webinars, there will be plenty of time for audience questions and feedback.

For more details, and to register to attend, click here.

4.) Foresight Sparks – London Futurists in the Pub

After a break of several months over the summer, London Futurists in the Pub events are restarting on Thursday 11th September – in our by-now usual venue of Ye Olde Cock Tavern in Fleet Street.

On this occasion, we’re keeping the subject broad:

Which insights about possible near-term future scenarios deserve greater attention?

What are the tools, the sources of energy, the breakthrough technologies, the new operating models, the emerging campaigns, the creative thought patterns, the silent dangers, or the hidden stores of treasure, that people should be talking about more?

There will be short speaking opportunities for any attendees who believe they have important sparks of foresight that are worth sharing and debating.

To keep the meeting lively, each speaker will be restricted to 7 minutes maximum. We’ll hear from a number of different speakers before having several wider conversations.

If you want to prepare your provocation in advance, please get in touch with the organisers to reserve your speaking slot. We’ll give preference to people with something genuinely new to say.

For more details, and to register to take part, click here.

5.) Biostasis in the UK

Here’s another date for your diary: Saturday and Sunday 27th and 28th September.

Friends of London Futurists at Biostasis Technologies and Tomorrow Bio are co-organising a weekend of cryonics activities focused on UK members and cryonics response capabilities.

Featured speakers on Saturday will include Max More, Aschwin de Wolf, and Emil Kendziorra. On Sunday, interested members and medical professionals can participate in a cryonics training session and see the new Cryonics UK response vehicle.

This will be taking place at a venue in London E2.

To be kept up-to-date with the plans for this event, click here.

Preparing for biostasis is sometimes called “Plan B”, in contrast to the “Plan A” activities which seek to accelerate biological rejuvenation ahead of our legal deaths. These latter activities are the subject of the remaining items in this newsletter. But at the Biostasis UK event, you’ll likely hear calls to switch the order of our plans – that preparing for biostasis should become “Plan A” instead.

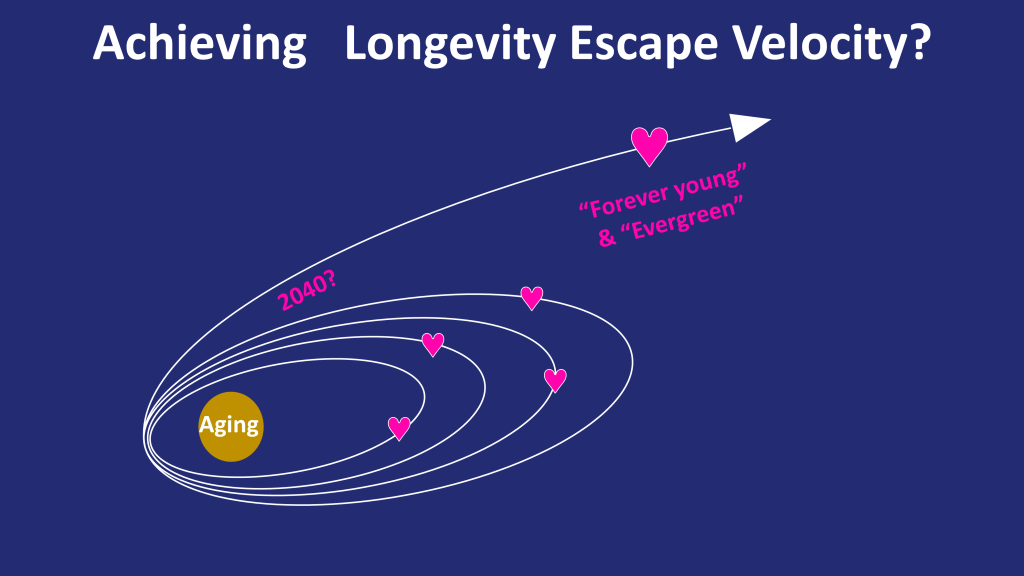

6.) Accelerating toward Longevity Escape Velocity

Next Tuesday, 9th September, I’ll be one of several speakers at the online VSIM:25 event, in the first of a number of sessions dedicated to “Longevity and Transhumanism”.

You can find the schedule online here, as well as the process to sign up to attend.

Note that the times on that site are in Eastern European Time, that is, two hours ahead of UK time.

My topic that morning will be “Catalysing a phase transition in the speed of progress toward Longevity Escape Velocity”.

I’ve covered some of these ideas before, for example in this blogpost from May 2024, but my thinking has moved on since then in various ways.

7.) TransVision Madrid

In case you’re unable to join the VSIM:25 event online, you’ll have another chance to hear me speak on a similar theme at TransVision Madrid, which is taking place on 1st and 2nd October.

If you check the TransVision Madrid website, you’ll see a host of eminent speakers are lined up to take part. I look forward to seeing many of you there!

8.) Aubrai – pioneering BioAgent and ecosystem governance token

One reason it’s been so long since the previous London Futurists Newsletter is that I have been rather busy in my role as Executive Director of LEVF (Longevity Escape Velocity Foundation), supporting the development and deployment of Aubrai.

No, that’s not a misspelling. Aubrai is an online agent trained on the insights and research findings of Aubrey de Grey, and integrated with decentralised science funding tools.

Aubrai is designed to support LEVF’s groundbreaking RMR2 project. If successful, Aubrai could provide aging’s “AlphaFold moment”.

At present, you can interact with Aubrai on X (formerly known as Twitter).

Tomorrow, Friday 5th September, starting at 5pm UK time, there’s going to be an online X Space featuring representatives of LEVF along with LEVF’s partners at VitaDAO and Bio Protocol. We’ll be reviewing some of the first hypotheses arising from online interactions with Aubrai.

To set a reminder about this Space, click here.

Be bold!

// David W. Wood

Chair, London Futurists

As I have always maintained and said:

“Just because we can, doesn’t mean that we should”

Kind Regards,

Graham Mcnally Agricultural Production Manager, NGIP Group of Companies Mobile: +675 7178 2993 Email: gmcnally@agmark.com.pg Trunk Line: 7411 1424 | 982 9055 Ext: 148

NGIP Haus, Level 1, Talina, Kokopo P.O.Box 1921, Kokopo, ENBP Papua New Guinea http://www.agmark.com.pg