Dear Futurists,

The topic of “ethical AI” arises in many discussions these days. I confess that I am often frustrated by these discussions. They can seem a bit abstract or other-worldly.

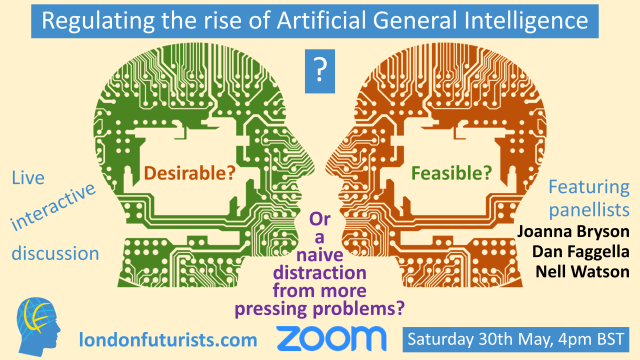

So let me offer my own views on “ethical AI”, and on the closely related topic of “regulating AI”. These thoughts provide some context for this Saturday’s London Futurists event, “Regulating the rise of Artificial General Intelligence”.

To be clear, few topics are more important than that of ethical AI.

1.) Ethics and regulations

There are some things which we have the ability to do, and which we might like to do (on occasion at least), but which we decide for various reasons not to do.

These things are the subject of ethics. They are things we choose to avoid or decline, despite their being within our power, and despite any desires we might harbour for them.

Perhaps we make such choices out of a conviction that a better future is more likely to arise as a result of our avoidance, sacrifice, or turning away. Perhaps we simply feel that the actions in question are wrong at some fundamental level.

Sometimes society converts an ethical guideline into a legal regulation. For example, murder is a crime, and courts of law will generally send convicted murderers to prison. On other occasions, ethical views find expression in feelings rather than the laws of the land: we dislike people who are self-obsessed, or who cheat on their life partners, but (in most countries) there is no legal sanction against such actions.

Consider AIs that can deceive people. For example, AIs can help create fake videos using a technique called generative adversarial networks (GANs). As the headline of a recent Forbes article put it, “Deepfakes are going to wreak havoc on society: we are not prepared”. Should we therefore ban such uses of AI?

What about AIs that improve the speed at which weapons can initiate actions against perceived incoming enemy soldiers – such as the SGR-A1 sentry gun in the Korean demilitarised zone?

One more example: AIs could make malware more destructive by cleverly evading security countermeasures. Such malware could bring rich financial rewards to the people who deploy it. Should the technology it exploits be restricted?

Suggestions that this kind of AI should be restricted often result in one of the following responses:

- Any such AI technology has potential positive uses as well as negative ones. If we ban the technology in question, we’ll slow our progress towards other beneficial outcomes to which that AI could contribute.

- Any bans on such software will be ignored by the more ruthless members of the global community. If we constrain our own ability to develop such solutions, we’ll find ourselves at the mercy of those who evade the constraints.

- Since AI is changing so quickly, it’s premature to lock in any system of restrictions. Legal regulations will likely fossilise and become outdated.

But as I see it, such responses are no reason to give up on searching for agreements on ethical and/or legal constraints on AI. Instead, they’re a reason to redouble our efforts on clarifying the pros and cons of different approaches – and to find innovative new frameworks in which the benefits strongly outweigh the drawbacks.

That’s why I am planning to hold not just one event but a series of events in the weeks and months ahead, on the topic of regulating emerging technologies.

That series starts this Saturday.

2.) This Saturday’s event

Three researchers who have thought long and hard about matters of AI and ethics will be joining me in discussion this Saturday:

- Joanna Bryson, Professor of Ethics and Technology at the Hertie School, Berlin

- Dan Faggella, CEO and Head of Research, Emerj Artificial Intelligence Research

- Nell Watson, tech ethicist, machine learning researcher, and social reformer.

The questions tabled for discussion are as follows:

- As research around the world proceeds to improve the power, the scope, and the generality of AI systems, should developers adopt regulatory frameworks to help steer progress?

- What are the main threats that such regulations should be guarding against?

- In the midst of an intense international race to obtain better AI, are such frameworks doomed to be ineffective?

- Might such frameworks do more harm than good, hindering valuable innovation?

- Are there good precedents, from other fields of technology, of international agreements proving beneficial?

- Or is discussion of frameworks for the governance of AGI (Artificial General Intelligence) a distraction from more pressing issues, given the potential long time scales ahead before AGI becomes a realistic prospect?

Depending on what each panellist says, and depending on the questions raised in real-time by audience members, the discussion could well move into adjacent fields too.

The event will run from 4pm to 5.30pm BST. For more details, click here.

To register to take part, you’ll need to visit this Zoom page. In case the Zoom registration takes some time, I advise you not to leave it to the last minute.

3.) The start of an answer: nine principles and a dilemma

Here are nine principles that I personally believe need to be present in any viable system of AI regulations:

- Accountability: When a mishap arises from an AI solution, the company that provided the solution should not be allowed to shrug off responsibility for it, for example by hiding behind get-out clauses in one-sided licence agreements

- Data transparency: Users of AI systems should be made aware of potential limits and biases in the data sets used to train these systems

- Algorithm transparency: Users of AI systems should be made aware of any latent weaknesses in the design characteristics of the model, including any potential for the system to reach unsound conclusions in particular circumstances

- Explainability: Preference should be given to AIs whose outputs can be reviewed and explained, rather than users simply having to accept a prior track record of apparent success

- No hidden trade-offs: Trade-offs between different measures of fairness ought to be made explicitly, rather than implicitly (bear in mind the counter-intuitive point that different ideas on fairness are often impossible to satisfy at the same time)

- Anti-fragility: Users should be made aware of any potential disaster modes, in which solutions can tip over from being beneficial to having truly adverse effects

- Verifiability: Preference should be given to AI systems where it is possible to ascertain that the system will behave as specified, and where it is possible to ascertain whether the specification has significant holes in it

- Security: Users should be made aware of any risks of the AI being misled (e.g. by adversarial data) or of its safety measures being edited out or otherwise circumvented

- Auditability: Preference should be given to AIs whose operation can be reliably monitored, to ensure they are behaving according to prior agreements.

Of course, in any rush to market – in any rush to beat competitors with a novel solution – there will be many temptations to sidestep these points. People might tell themselves that safety (or ethics, or fairness, etc.) can be engineered in afterwards. As AI systems become increasingly powerful, that kind of thinking will become increasingly dangerous. At the same time, the desire to cut corners will likely increase too.

How to resolve that dilemma deserves urgent attention. I’ll come back to that point in later newsletters.

4.) Video from last Saturday’s event: The coming pandemic

Last Saturday’s event, “Mental distress – the coming pandemic?”, was the first London Futurists event for some time to be held on the Zoom Webinar platform.

We moved there because the alternative, the Crowdcast platform, seemed too prone to occasional server-side performance issues.

As it turns out, there were occasional audio and video glitches with Zoom Webinar too, though overall the experience appears to have been smoother. What’s more, Zoom seems to have addressed some of the security concerns that previously raised red flags. So we’re sticking with Zoom for the time being.

Here’s a video recording of the event:

Many thanks are due to the panellists, Stefan Chmelik, Anna Gudmundson, Dzera Kaytati, and Martin Dinov, for sharing their insights and ideas with us. There’s lots to savour in what was said.

The description notes for the video contain links giving more information about items mentioned during the discussion, or which were placed into the text chat by participants. Anyone interested in improving mental wellbeing will find plenty there worth following up.

5.) Community fundraiser: Victor Bjork

I’ve known Victor Bjork for a number of years, and admire him as a researcher with special talents in the field of rejuvenation research.

He recently graduated from Uppsala University, Sweden, with a master’s degree in molecular biology.

I’ll let LongeCity take up the story of what happened next:

Lockdowns associated with the Coronavirus pandemic are affecting rejuvenation research and the lives of researchers – recent molecular biology graduate Victor Bjork among them.

For years, while pursuing his studies, Victor has been active in organizations promoting research and activism for longer and healthier lifespans – including many contributions to the LongeCity community. After obtaining his degree, Victor, originally from Sweden, was thrilled to be recruited to intern at an aging research company in the San Francisco Bay Area. Now, because of Covid-19, his engagement has been terminated and Victor’s visa is in peril.

With the goodwill of supporters, we hope to raise enough funds to enable Victor to stay and work with one of LongeCity’s Affiliate Labs in the area, OpenCures.

OpenCures, the latest venture by former LongeCity Director Kevin Perrott, helps self-directed researchers access cutting-edge tools such as mass spectrometry to self-evaluate their biomarkers. Classified as an essential biotech, OpenCures is not restricted by the current lockdown provisions. At OpenCures, Victor could not only gain valuable experience and training but also help with a key project for LongeCity, BASE:

- Biomarkers of Aging Self Experimentation (BASE) aims to advance longevity science by collating data from scientific sources and personal test results in a curated community format.

- The project will empower LongeCity members to analyse and understand their own data, recruit new ‘citizen scientists’ to the community, and provide a valuable open source reference for researchers.

The stated fundraiser goal is $13,000. LongeCity will MATCH each donation – for a total of $26k!

This fundraiser will cover the minimum expenses for Victor’s lawful employment at OpenCures for the remainder of his visa and the technical and conceptual development of the BASE project. Funds will be collected by LongeCity and transferred to OpenCures as a research grant.

Donations to support Victor’s work can be made here via PayPal or credit card. You’ll see that donations made recently range from $5 up to $1,000. Every contribution helps!

Note: LongeCity (Longecity.org/ImmInst.org) is an international, not-for-profit, membership-based organization. LongeCity’s approach to supporting regenerative science has long been characterized by small-scale, high-impact projects sourced and steered by and in connection with its community. Years before ‘crowdfunding’ and ‘citizen science’ became well-known concepts, they were practised at LongeCity. You can read about some of their previous initiatives here and here.

// David W. Wood

Chair, London Futurists