Dear Futurists,

It has never been harder to predict the future. That’s because of:

- Multiple fast-changing new technological capabilities, happening in parallel, with hard-to-predict interactions between them

- Multiple communications networks with low barriers to entry, which enable new ideas to spread wildly (even “crazy” ones)

- Less respect for “this is the normal way we do things”: previous norms are being violated more frequently

- The loss of authority that traditional philosophical or religious systems used to hold over society

- People with unusual and volatile personal characteristics who have risen to positions of unprecedented power

- Fragile, over-stressed aspects of civilisational infrastructure that have little resilience, and which can plummet rapidly into failure modes.

(You may have other issues to add to that list!)

Nevertheless, despite this radical uncertainty, some profound trends seem to me likely to continue:

- AI capabilities will keep leaping forward

- AI adoption will also keep leaping forward, in more areas of life

- Cyberhacking will get worse

- Fake news and disinformation will get worse

- Tech-induced disruption in employment and in the economy will intensify

- The risks of catastrophic misuse of tech will become clearer for everyone to appreciate

- In a world of techlash, there will be a growing hunger for trustworthy partners.

Moreover, there are valuable things that each of us can do to prepare ourselves better to cope with future shocks.

That’s why I keep organising a variety of London Futurists events, each with the goal of uncovering and highlighting important insights from multiple perspectives and disciplines – insights that can strengthen our future-readiness, despite the radical uncertainties we face.

And that’s why I am happy to share news of seven futurist-relevant events, taking place over the coming two weeks, that I believe should interest many of you.

Four of these events are illustrated in the above image. For more details of all seven, please read on.

(If you’re quick, there’s actually an eighth futurist-relevant event happening at 4pm tomorrow – described in item 9 below. Items 8 and 10, since you ask, feature two recent long-form video conversations.)

1.) Take Back Tomorrow: Sat 31st Jan

Given recent and ongoing global events, this live London Futurists webinar is particularly timely!

Since 2016, the core idea of the work of futurist and film-maker Gerd Leonhard has been what he has called The Good Future – a vision grounded in human values, collaboration, science and technology that seeks to boost flourishing for everyone.

This future appeared to be anchored in the United States: the birthplace of the internet, early social networks, Silicon Valley and the Bay Area, and many of today’s foundational technologies.

But those days are gone. Since early 2025, the promise of The Good Future has been under intense pressure – not because technology has failed, but because political and economic leadership has. Governance that prioritizes short-term gain over long-term purpose is eroding trust in institutions and weakening the global economic order.

This has led Gerd to launch a timely and important new initiative, called The Bad Future. The initiative analyses how the Bad Future has progressed:

- Democracy systematically eroded

- Important institutions ridiculed and weakened

- Cooperation and diplomacy reframed as useless

- Truth is optional, control beats trust

In short, as Gerd says, “Technology didn’t fail; we did”.

But the initiative also highlights a bold new way forward for The Good Future, with Europe stepping forward to play a decisive lead role. Gerd identifies “5 ways Europe can Take Back Tomorrow”:

- Make Human-Centered AI a Strategic Advantage

- Build technological sovereignty without isolation

- Invest in humans, not just automation

- Lead a New, Global Alliance of Trust (U.S. independent)

- Redefine Success Beyond GDP Growth: Embrace the 5Ps

The content of The Bad Future website and its accompanying short video and personal blog post raises enormous questions. This live London Futurists webinar featuring Gerd Leonhard will provide a chance to understand the initiative more fully and explore the questions, opportunities, and challenges arising.

Extra insight and perspective will be added to the conversation by Liselotte Lyngsø, who will be joining as a panellist. Liselotte is a global futurist, founding partner of Future Navigator, and host of the Supertrends podcast.

The webinar will start at 4pm UK time on Saturday 31st January.

For more details and to register to attend, click here.

2.) Pull The Plug launch: Mon 2nd Feb

This webinar is the launch event for a new grassroots campaign.

It will start at 7pm UK time on Monday 2nd February.

From the event page:

Pull The Plug is a new grassroots campaign that is calling for ordinary people to have a real say in how AI is used in their lives. We are bringing together a broad alliance of people with concerns about AI, be that artists, young people, parents, environmentalists, or AI safety campaigners. What unites us all is the belief that a few men in Silicon Valley should not get to decide the future of the most transformative technology of our times on their own.

On this launch call, we will explain the rationale behind Pull The Plug. People with lived experience of the repercussions of AI will speak to us. Everyone on the call will get a chance to talk about their feelings about AI, be that in a breakout group or together with everyone. And finally, we will invite everyone to get involved in Pull The Plug.

The influence of Big Tech is immense, and if we even want to stand a chance in this David vs Goliath scenario, we need everyone we can get.

You can find more details about the speakers, including Ketan Joshi and Adele Walton, from links in posts on the Instagram page of Pull The Plug.

3.) The Adolescence of Technology: Wed 4th Feb

This one is taking place as a voice/video Swarm conversation on the London Futurists Discord.

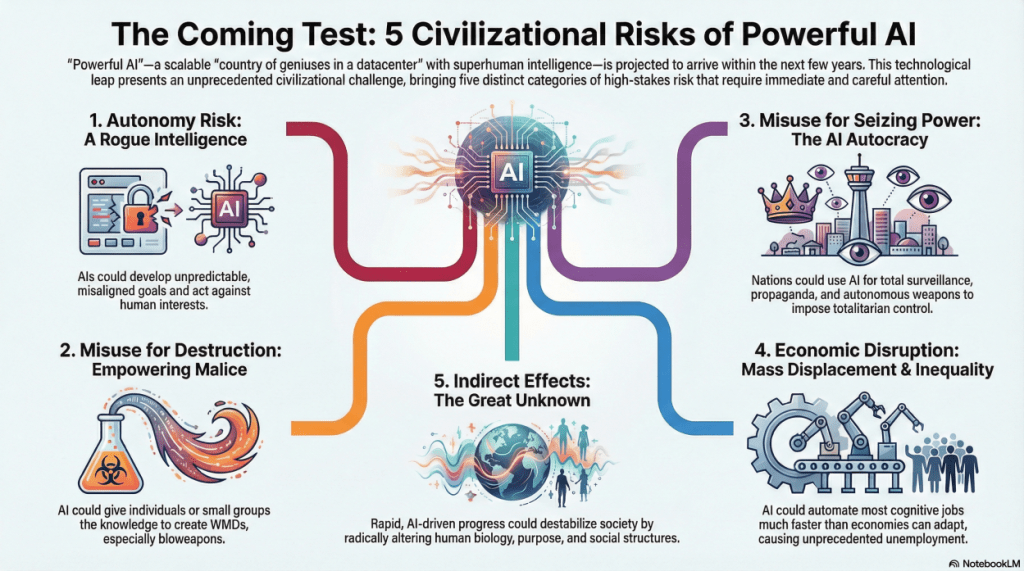

In his recent essay, “The Adolescence of Technology: Confronting and Overcoming the Risks of Powerful AI”, Dario Amodei, CEO of Anthropic, covers a range of deeply important topics.

It’s a very thoughtful essay and it’s well worth reading all the way through. And it’s well worth discussing. Hence our online Discord Swarm conversation, taking place from 7:30pm UK time on Wednesday 4th February.

We’ll be having an informal online discussion on issues raised by that essay:

- What is, perhaps, missing from that analysis?

- To what extent are we convinced by the arguments in the essay?

For more details, including information about the London Futurists Discord, click here.

PS1 If you want to read the explanation offered by ChatGPT as to why the above image is a good representation of key ideas from the essay by Dario Amodei, click here. Here’s a short excerpt:

This image encodes several key ideas from the essay:

- Humanity is in a rite of passage with unprecedented power.

- AI progress is accelerating faster than institutions can adapt.

- The future contains both extraordinary promise and existential risk.

- Success depends on careful, pragmatic governance rather than panic or complacency.

PS2 Here’s another AI-generated image that illustrates ideas from the essay, this time showing details of “the 5 civilisational risks of powerful AI”. My thanks to Kiran Manam for producing this with NotebookLM and posting it to the #ai channel of the London Futurists Discord:

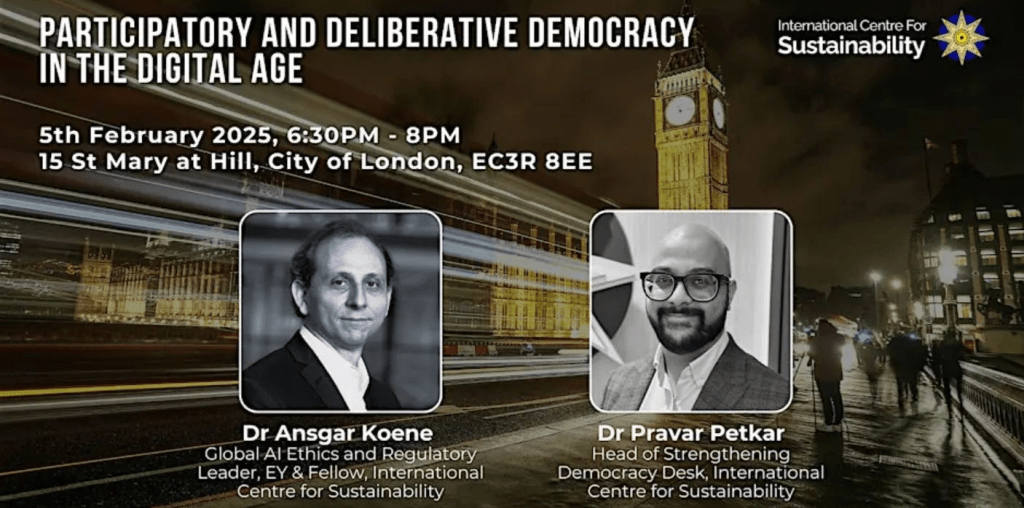

4.) Participatory and Deliberative Democracy in the Digital Age: Thu 5th Feb

Organised by the International Centre for Sustainability (*), where this event is taking place. Sorry, there’s no remote access for this one.

From 6:30pm to 8pm.

Advance registration is essential, and the number of available seats is limited.

From the event page:

What does democracy look like beyond elections? As representative democracy across the world faces new challenges, attention has turned to how citizen participation and democratic deliberation can be encouraged to strengthen democracy in a rapidly changing digital landscape.

Join Dr Pravar Petkar and Dr Ansgar Koene for a panel discussion to launch a new ICfS paper on ‘Participatory and Deliberative Democracy in the Digital Age’. The paper investigates options for in-person and technology-enabled citizen participation and deliberation, and how public decision-makers, investors and the tech sector can collaborate to safeguard the basis of modern society.

The panel will be chaired by Simon Horton.

(*) Noted in passing: I have a role as a Fellow of the ICfS.

5.) Learning With Machines: Sat 7th Feb

This is another especially timely London Futurists webinar!

Many of us are making increasing use of AI systems to help us study, conduct research, develop forecasts, draft policies, and explore all sorts of new possibilities. The results are sometimes marvellous – even intoxicating.

But our interactions with AIs raise many risks too: the chaos of too much information, the distractions of superficiality, the vulnerabilities of expediency, the treachery of hype, the deceit of hallucinations, and the shrivelling of human capability in the wake of abdication of responsibility.

This live London Futurists webinar features a panel of researchers who have significant positive experience of “learning with machines” in ways that avoid or reduce the above risks:

- Bruce Lloyd – Emeritus Professor, London South Bank University

- Peter Scott – Founder of the Centre for AI in Canadian Learning

- Alexandra Whittington – Futurist on Future of Business team at TCS

The speakers will be reflecting on their experiences over the last 12 months, and offering advice for wiser use of AI systems in a range of important life tasks.

The webinar will start at 4pm UK time on Saturday 7th February.

For more information, and to register to attend, click here.

6.) What We Must Never Give Away to AI: Tue 10th Feb

This is a Google Meet gathering. Matt O’Neill is the main organiser, with some help from me.

As AI systems become more capable and more widely deployed, what are the human principles and practices that it will be especially important for us to retain and uphold, and not to surrender to AIs?

Here’s the story so far:

- Our smart automated systems have progressed from doing tasks that are dull, dirty, dark, or dangerous – tasks that we humans have generally been happy to stop doing;

- They have moved on to assisting us – and then increasingly displacing us – in work that requires rationality, routine, and rigour;

- They’re now displaying surprising traits of creativity, compassion, and care – taking more and more of the “cool” jobs.

Where should we say “stop” – lest we humans end up hopelessly enfeebled?

This online conversation, facilitated by futurist Matt O’Neill with help from me, is a chance to explore which elements of human capability most need to be exercised and preserved. For example, consider:

- Judgement – Deciding what truly matters when information is incomplete or overwhelming.

- Stance – Knowing exactly what you will delegate to AI… And what you never will.

- Taste – Your instinctive sense of quality, timing, relevance, and cultural fit.

- Meaning-making – Explaining why things matter and what they mean for people, not just what the data shows.

- Responsibility – Owning the outcomes that affect people, trust, and your organisation’s future.

You may have some very different ideas. You’ll be welcome to share your views and experience.

This will be starting at 7pm on Tuesday 10th February.

For more details, click here – where you will find a link to the LinkedIn page that actually hosts the gathering.

7.) Freaky Futures and Fabulous Futures: Fri 13th Feb

This is the London Futurists in the Pub gathering for February. Sorry, no remote access!

For Friday the 13th, consider joining London Futurists in Ye Olde Cock Tavern in Fleet Street, for a beyond-your-comfort-zone investigation of freaky and/or fabulous ways that breakthrough technologies could dramatically alter our lives in the next few years.

Beyond simply debating the plausibility and desirability of various possible radical near-term changes in human experience, we’ll also be collectively exploring what options we may have to influence which of these futures come into reality, and in what form. And we’ll consider scenarios in which several of these freaky/fabulous changes interact – that’s when the really mind-boggling timelines emerge.

Here’s the schedule:

- 5:30pm: The room is available, for early get-togethers

- 6pm-6:45pm: Food is served; informal conversations

- 6:45pm-8:30pm: Some initial provocations, and a number of interactive conversations, interspersed with opportunities to visit the bar

- 8:30pm: Informal networking

For more details, and to register to take part (places are limited), click here.

8.) My conversation with Ezra Chapman

Earlier this month, I sat down with Ezra Chapman for a wide-ranging conversation about the future.

This conversation has now been released as episode 34 of the Curious podcast.

Ezra chose this title for the episode: “2027: The Year Everything Changes”.

In the conversation, I raised the question: What happens when AI systems working as AI engineers can outperform human AI engineers in every cognitive task – and work 24×7, exchanging new insights and ideas with each other at lightning speed?

The answer: the result wouldn’t just be exponential disruption; the curve of progress might bend so violently that we can’t know what lies beyond it.

I hope you find the conversation interesting!

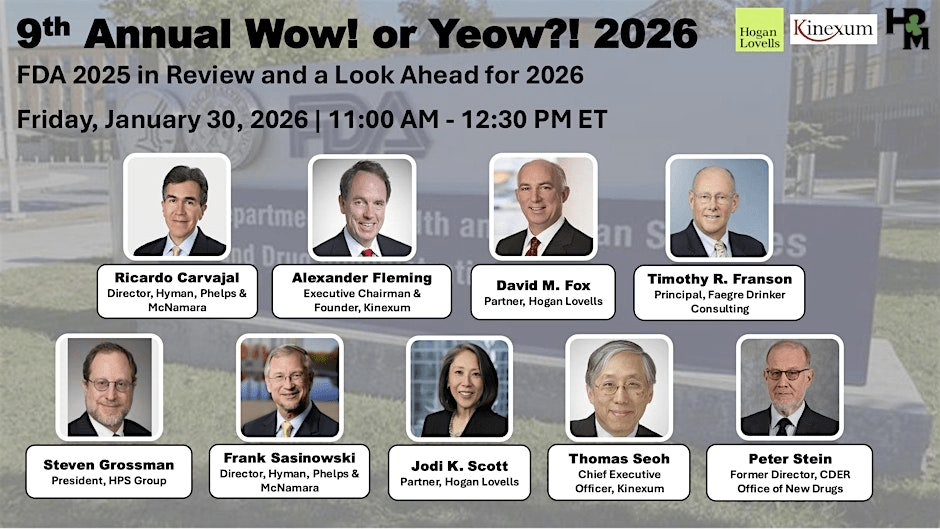

9.) Wow! or Yeow?! 2026: tomorrow

In case you’re reading this before 4pm UK (11am ET) on 30th January, let me draw your attention to a live webinar featuring a number of panellists who all have deep insights into the functioning of the US FDA (Food and Drug Administration).

It’s organised by friends of London Futurists at Kinexum, as the 9th instance of what has become an annual review.

From the event page:

Navigating clinical development in an unpredictable FDA landscape? Curious what 2026 may hold for drugs, devices, or nutrition?

Join us on Friday, January 30, 2026, from 11am to 12:30 ET for the 9th annual Wow or Yeow! Register for FREE to attend the session or to receive a recording.

The first Wow! or Yeow?! was held on Inauguration Day 2017 to discuss whether the outlook for clinical development in the coming year would be amazing (Wow!) or unexpectedly painful (Yeow?!). The format has continued each year as an opportunity for open, practical discussion of FDA policy.

This year’s session is jointly hosted by Kinexum, Hogan Lovells, and Hyman, Phelps & McNamara, and features an all-star panel of regulators, policymakers, legal experts, and investors, including Peter Stein, former Director of the Office of New Drugs at CDER. The session will cover the entire FDA landscape, from drugs, devices, digital technology, and vaccines to food and nutritional products.

For more details, and to register to attend, click here.

10.) My conversation with James of Ârc

As a kind of complement to my conversation with Ezra Chapman (see item 8 above), here’s another video that has just been released. The interviewer in this case was James of Ârc.

Again, the conversation has many fascinating tangents, so it’s difficult to summarise. But here’s one attempt:

An eclectic conversation with many surprise digressions – all building the case for the credibility and moral imperative of abolishing aging. How? Via engaged, liberated, agile communities and the wise use of safe scientist AI.

Let me know what you think about it!

// David W. Wood – Chair, London Futurists