Dear Futurists,

1.) Experiments with AI

I start this newsletter with an experiment. “Imagine a map of the world highlighting London, Paris, and Riyadh”, I asked the Midjourney AI.

I thought that such an image would be a good lead-in to a reminder about our event taking place tomorrow evening, which is described as follows:

Events with futurist significance are happening all around the world – including the AI Action Summit in Paris (10-11 Feb) and the Global Healthspan Summit in Riyadh (4-5 Feb). This meetup provides a chance for futurists to gather in a pub to discuss some of the implications of these events. The conversation will be led by some speakers who attended these events, and by others who observed them from afar.

I’ve learned to expect to have to iterate several times with Midjourney to obtain a satisfactory image. But the images the AI offered me took me by surprise – in a bad way. Here are two of them:

Then I thought I would try ChatGPT instead. But the outcome was equally frustrating:

That was despite me giving an additional prompt to ChatGPT, “Ensure the names of places are in geographically accurate places”.

This neatly encapsulates some of the paradoxes of contemporary AI systems. Sometimes they are shockingly stupid. But on other occasions, they can be shockingly profound. We humans can err by putting too much trust in AIs, but we can also err by imagining AIs to be fundamentally incapable.

I had a similar pair of reactions after completing a draft of a chapter for a forthcoming book. For the first time, I fed a piece of writing of mine into ChatGPT, and asked for some suggestions to improve it.

Some of ChatGPT’s suggestions were profound, and my chapter has definitely improved as a result. But others were inane and misleading – including hallucinating references to articles that (as far as I can tell) don’t exist.

(I’m glad that I checked!)

When the output of an AI is an image, or suggestions to alter the content of a book chapter, mistakes generally aren’t fatal. Instead, they’re a source of amusement – as well as frustration. But when AI is plumbed more deeply into society’s critical infrastructure, we need to become a lot wiser.

That was part of the rationale for so many leaders gathering in Paris over the last few days – leaders from politics, technology, business, and civil society groups. What advice can we give each other in order to get the best out of AI, and to avoid the worst?

2.) News from Paris and Riyadh – tomorrow evening

That takes me back to our “London Futurists in the Pub” gathering tomorrow evening.

You can read the details here – and register to attend, if there are any spaces left.

Richard Barker will be kicking off a discussion about what happened at the Global Healthspan Summit in Riyadh (which I also attended). After a break, we’ll switch to discussing what happened at various events and side-events in Paris, all on the theme of AI safety. I’ll be kicking off that part of the conversation. In both cases, we’ll be ready to feed in observations and questions from anyone else who attended either event, or who followed the proceedings online.

Let me emphasise three points:

- Everyone who attends will be invited to say a few quick words about themselves during the opening part of the event, and to mention one item of news from the previous month which they thought was particularly significant or interesting. Please come prepared!

- Everyone who plans to order food to eat during the event should order it from the downstairs bar straight after arriving. The venue has limited kitchen facilities, so food will be first come, first served.

- As on previous occasions, we’ll be upstairs, but you should order your drinks from the bar downstairs.

Finally, note that this is an in-person meeting only, with no recording or live-streaming.

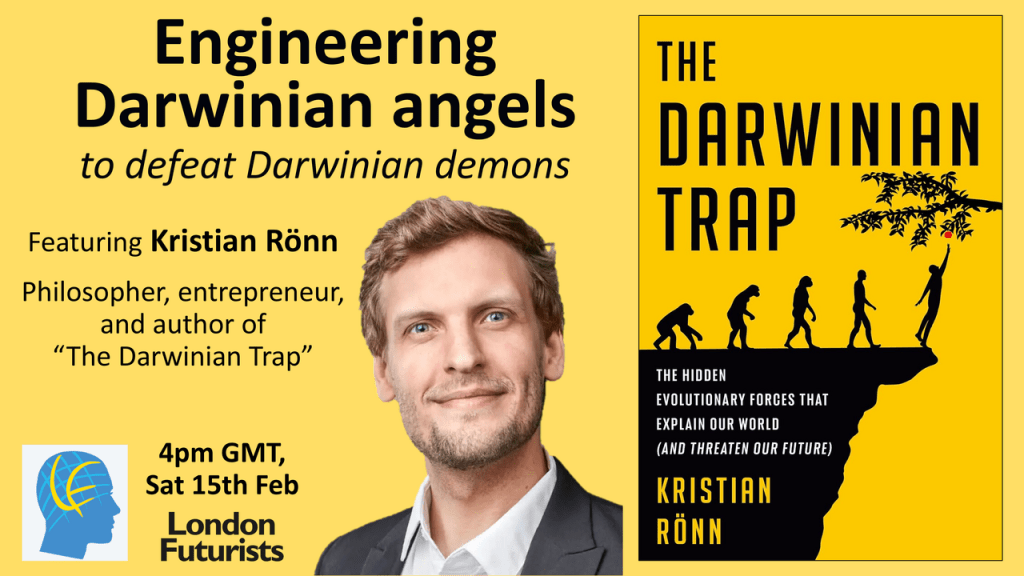

3.) Engineering Darwinian angels, with Kristian Rönn

On Saturday, we’ll have a different kind of event – an online webinar, accessible from anywhere in the world that has Internet connectivity.

Our speaker in this case will be Kristian Rönn, the author of the book The Darwinian Trap: The Hidden Evolutionary Forces That Explain Our World (and Threaten Our Future).

It’s an extraordinary book, which highlights the problems with the incentive structures that surround us.

Why do people sometimes do bad things? We may blame them for bad behaviour, bad philosophy, bad psychology, and so on, but from another level of analysis, we should expect that kind of bad behaviour, bad philosophy and bad psychology to continue until destructive incentives are removed from our environment.

Rönn links this analysis with some venerable arguments in evolutionary biology. Does natural selection promote the survival of the fittest organisms, the survival of the fittest genes, or the survival of the fittest species? What happens when the best outcome for a species requires individuals to make lots of sacrifices? Well, sometimes groups can rise above what would happen if individuals just pursued narrow self-interest. How does this arise?

Rönn goes on to compare potential “top-down” and “bottom-up” solutions to the three existential problems he describes: an out-of-control race for resources (including energy), an out-of-control race for power (including nuclear weapons), and an out-of-control race for intelligence (including AI). He argues that we’re going to need elements of both top-down (centralized) and bottom-up (decentralized) influence on markets.

I strongly recommend you find the time to join this Zoom webinar this Saturday. That will allow you to participate in the live conversation.

For more information, and to register to attend, click here.

// David W. Wood – Chair, London Futurists