Dear Futurists

It’s sometimes encouraging but it’s often exasperating.

I’m referring to the ongoing public discussion about the possible arrival of AGI – Artificial General Intelligence.

Some of the material that has recently been published reaches new heights of quality. Consider, for example, the excellent review article “An Overview of Catastrophic AI Risks” written by three researchers at the Center for AI Safety – Dan Hendrycks, Mantas Mazeika, and Thomas Woodside. Here’s the abstract for that article:

Rapid advancements in artificial intelligence (AI) have sparked growing concerns among experts, policymakers, and world leaders regarding the potential for increasingly advanced AI systems to pose catastrophic risks. Although numerous risks have been detailed separately, there is a pressing need for a systematic discussion and illustration of the potential dangers to better inform efforts to mitigate them.

This paper provides an overview of the main sources of catastrophic AI risks, which we organize into four categories: malicious use, in which individuals or groups intentionally use AIs to cause harm; AI race, in which competitive environments compel actors to deploy unsafe AIs or cede control to AIs; organizational risks, highlighting how human factors and complex systems can increase the chances of catastrophic accidents; and rogue AIs, describing the inherent difficulty in controlling agents far more intelligent than humans.

For each category of risk, we describe specific hazards, present illustrative stories, envision ideal scenarios, and propose practical suggestions for mitigating these dangers.

Our goal is to foster a comprehensive understanding of these risks and inspire collective and proactive efforts to ensure that AIs are developed and deployed in a safe manner. Ultimately, we hope this will allow us to realize the benefits of this powerful technology while minimizing the potential for catastrophic outcomes.

But in parallel, there are also plenty of examples where people remain determined to deny that AI is becoming more general, more capable, or more intelligent. One writer, declaring themselves to have “over 30 years’ experience in AI”, unblushingly asserted on LinkedIn earlier this week that

There has not been a breakthrough in AGI since the 1990s at least – systems have just become bigger and faster, which is not the same as more intelligent.

And, far too often, writers assert that because they personally believe collaboration to be the highest virtue, we can rest assured that any superintelligent AI that emerges will decide that collaborating with humans is the best possible use of its resources – notwithstanding the observation that, to be blunt, even the best of us humans put little effort into a grand collaboration with ants and termites.

What can be done to steer this conversation away from the inanities and self-deluding ignorance, toward a clearer perception of the opportunities and risks that lie ahead?

Read on for some suggestions.

1.) Peter Scott visiting London Futurists this Saturday

Peter Scott is the host of the renowned podcast “Artificial Intelligence and You”, which has released over 160 episodes since its launch in June 2020. The theme of that podcast is “What is AI? How will it affect your life, your work, and your world?” Peter’s guests include a who’s who of AI pioneers, experts, analysts, and critics, as well as some lesser known names with perspectives that are unusual but important.

I find Peter to be not only exceptionally well informed but also friendly and diplomatic. He brings a calm thoughtfulness to discussions about the future of AI that could easily become polarised.

This Saturday, the 12th of August, Peter will be visiting London Futurists at our usual real-world meeting location in Birkbeck College, to bring us up to date with what he has learned from his guests and his own recent research – particularly on the growing capabilities of generative AI systems and the potential creation of AGI. Peter’s presentation will be a prelude to a wide-ranging group conversation that will explore options on the most critical questions about AI and AGI.

We’ll be in lecture room 532 on the 5th floor of Birkbeck’s main building. Birkbeck is maze-like in places, so I suggest arriving at the reception at least 10 minutes before the official start time of the event, namely 2pm.

To register for this event, click here.

Later that afternoon, from 4.15pm onwards, we’ll be moving to the nearby Marlborough Arms pub, where there will be opportunities for wider discussion of all matters futurist. To register to attend that part of the afternoon, please follow this link.

(You’ll find there’s a small charge to register for the Birkbeck part of the afternoon – that’s to help cover our room hire costs. But there’s no charge to attend the Marlborough Arms part.)

2.) Creating and exploring AGI scenarios – 26th August

Whilst I have high hopes that we’ll all leave this Saturday’s events smarter than at the start of the day, I appreciate that there are so many angles to the future of AGI that there are bound to be some questions left over.

Therefore, the discussion will continue, in a different form, on Saturday 26th August.

On that date, our meeting will be in an online tool we’ve not used before: Remo, as provided by Telepresent.

This will be less of a “webinar” and more of a collaborative working session. Here’s the draft schedule:

15:40 – System opens, ready for informal online networking

16:00 – Scene-setting presentations and general questions

16:25 – Audience split into groups, to develop their own ideas about the evolution of AGI

16:50 – Groups report their findings to the plenary

17:15 – General discussion

17:30 – Ongoing informal networking, for those who wish to stay

You’ll find full details of both the meeting and the tool via this link.

On this occasion, as it is our first experiment with Remo, there will be no charge to register or take part. Participants will be welcome from all around the world.

3.) Save the date: TransVision Utrecht

(Image credit: 0805edwin from Pixabay, used with thanks.)

The Dutch city of Utrecht is home to what was for many centuries one of the tallest man-made structures on the planet, namely the Dom Tower. The city is also the venue of TransVision Utrecht, which will be taking place on the weekend of 20th and 21st January.

There’s not much to say about this event at the moment, except to point out that Utrecht is relatively close to where the first ever TransVision conference took place, back in 1998, in the Weesp area of Amsterdam.

But please make a note of that date in your calendars, since it will be a great opportunity to meet with transhumanists, futurists, and technoprogressives from near and far.

And if you live in the Netherlands and may be able to assist with the planning and management of this event, don’t hesitate to get in touch.

4.) What’s new in longevity

If January is too long to wait to network with numerous international transhumanists, futurists, and technoprogressives, why not travel to Dublin for the Longevity Summit Dublin taking place there next week (17-20 August)?

Every time I look at the page of speakers for this Summit, I find even more names and photos there.

The main organiser of the summit, Martin O’Dea, was the guest on the most recent episode of the London Futurists Podcast. Whether or not you are planning to attend that summit, you’ll find plenty of interest in Martin’s remarks in the episode. Click on the picture below to access the episode.

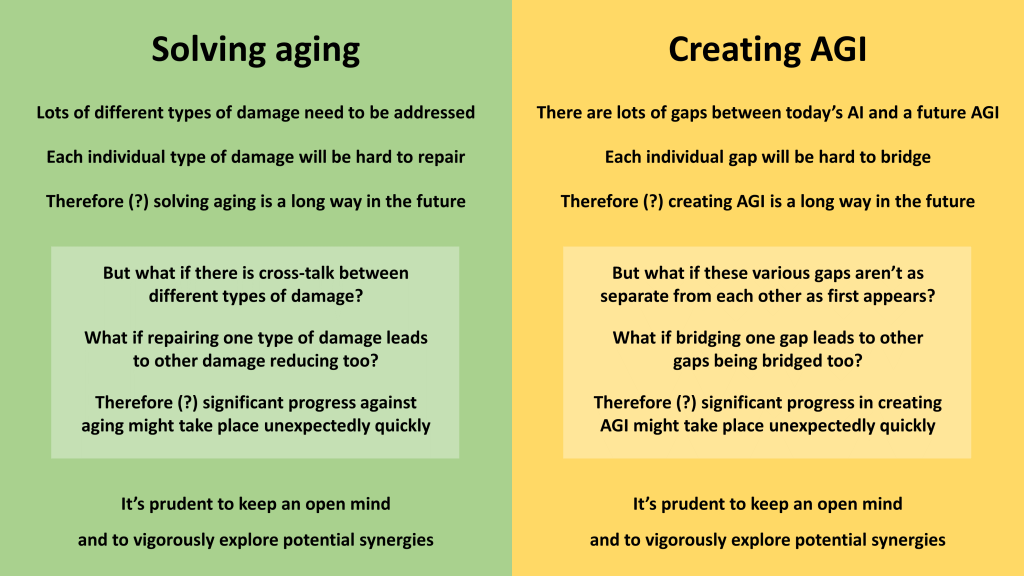

5.) Creating AGI and solving aging

Is there a possible connection between creating AGI and solving aging?

You bet! Indeed, there are multiple potential connections, as I discovered from online comments made on various social media platforms in response to what has turned out to be my most popular post in the last week or so.

I won’t repeat all these comments here. But I will say (to refer back to my remarks at the start of this newsletter) that they mainly landed on the encouraging end of the spectrum rather than the exasperating end.

In other words, there are still many reasons to be hopeful! Let’s keep our minds open, and vigorously explore potential synergies.

// David W. Wood

Chair, London Futurists