Dear Futurists,

I write these words from the opening day of PauseCon Brussels – an event with the headline title “From Risk to Responsibility: Join the Strategic Summit for a Global AI Pause”. Attendees have gathered from all over Europe – and in some cases from further afield.

1.) The AI Motivation Paradox

Tomorrow (Sunday), I’ll be leading a workshop at the event on the subject “The AI Motivation Paradox”.

This is from the specification for that workshop:

There are compelling arguments for urgently pausing the development of next generation frontier AI systems. Yet most of us do not act consistently with the implications of those arguments. Instead, we reach for convenient rationalisations or quietly relegate these concerns to the margins of our attention. What’s going on?

This is not primarily a failure of logic. It is a matter of psychology and sociology. Across business, public policy, and personal life, we humans are notoriously poor at recognising and responding to systemic catastrophic risks. Major change initiatives frequently falter – not because the evidence is weak, but because inertia, status-quo bias, fragmented incentives, and social conformity blunt our response. Even when risks are grave, disruption to familiar routines can feel more threatening than the danger itself. We prefer to say to each other, Don’t look up!

This workshop reviews examples of both failed and successful change efforts, distilling lessons about collective transformation under conditions of uncertainty and resistance. Expect some soul searching.

Participants will leave with practical insights into how to strengthen the social, institutional, and cultural forces capable of constraining reckless AI development – and how to overcome the psychological and social barriers that so often prevent timely action.

For those of you who aren’t in Brussels, I plan to record another version of this workshop shortly, and to upload the recording for wider access.

2.) A demonstration outside the European Parliament

To be clear: PauseCon isn’t just a talking shop. The event is leading up to a demonstration outside the European Parliament, on Monday afternoon, calling for the EU to initiate negotiations for a global treaty to pause the development of new frontier AI models.

If you’re in or near Brussels, I encourage you to join that demonstration. It’s taking place from 15:30-16:30 Brussels time. You can find more details here.

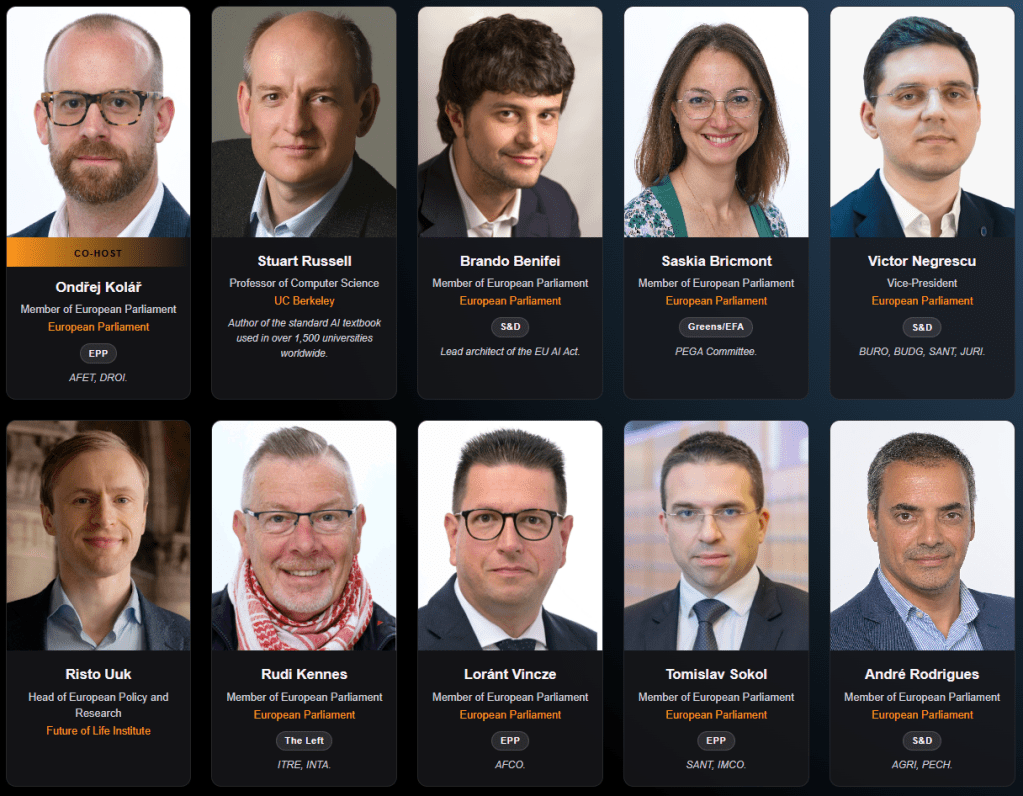

Earlier the same day, attendees from PauseCon will be joined inside the European Parliament building by several distinguished MEPs, to hear a number of keynotes and to take part in a panel discussion.

It appears that a political window is opening: more and more parliamentarians seem ready to listen to the argument that what has previously been a headlong race to build superintelligence should be subject to a global, verifiable moratorium.

3.) AI Safety March in London (28th Feb)

What about folk who aren’t in Brussels, but who are alarmed about the lack of binding guardrails for new frontier AI models?

Well, if you’re in or near London, I have some good news for you. What will very likely be the largest ever AI Safety March in the UK will be taking place next Saturday, 28th February.

For details of how to join that march – starting at noon at 207 Pentonville Road – see this Luma page.

Here are some extracts from that page:

Safety before Superintelligence

PauseAI UK is joining Pull the Plug, Assemble and other groups for the first-ever coalition march of AI safety and ethics grassroots groups. This will be the first time that a range of organisations with different concerns and perspectives on AI come together around shared goals. We also expect it to be the largest protest to date focused specifically on AI safety and governance.

There will be testimonies from people already affected by AI systems and speeches from researchers and technologists who work closely with the technology. At the end, we will hold an assembly to reflect on public concerns about AI and what responsible development should look like. The march will be non-violent, legal, and coordinated with the police to ensure it is accessible and family-friendly.

After the protest most people will go to the assembly, where food and refreshment will be provided. We will also book a pub venue in case there isn’t enough space at the assembly or some people are not feeling up for it.

PauseAI UK’s Demand

We do not yet know how to safely develop advanced AI systems. Despite this, companies are investing billions of dollars to scale these systems as quickly as possible, while regulation and safety research fail to keep pace. Most people, including many working in AI, want stronger safeguards. But no single company or country will slow down while others race ahead. Public pressure is what breaks that deadlock and opens the door to democratically championed coordination and oversight.

A necessary step toward a global treaty is for the CEOs of the top AI companies to publicly state that they support a pause in principle. This means they would support a pause if every other player also paused at the same time, with independent auditors monitoring each company and country to ensure the treaty was honoured. This demand is already gaining traction. Demis Hassabis, CEO of Google DeepMind, has publicly stated he would support a pause in principle. The next step is making this position impossible for other AI company leaders to avoid.

At this protest, we will gather outside OpenAI and march through King’s Cross, where several major AI companies are based, to make this demand clear: We call on all top AI company CEOs to publicly support a pause in principle as a step toward binding international regulation.

Find out more about PauseAI UK and get involved at https://pauseai.uk/

Find out more about Pull the Plug at https://pulltheplug.uk/

The march could be a historic turning point: don’t miss it!

4.) From Bad to Bright Future

Maybe you think there’s more to preventing a bad future (such as unaligned superintelligence, and/or runaway climate change) than going on marches and contacting our elected representatives.

You would be right.

To consider the bigger picture, London Futurists will be hosting an online “swarm” conversation in a Zoom meeting room, from 7:30pm UK time on Wednesday 25th February, with the title “From Bad to Bright Future: How to make it happen”.

From the meetup page describing this event:

There are many near-future scenarios in which worrying trends in the present make all our lives much worse in the years ahead. This situation has been called “The Bad Future”. In this online event, Tony Czarnecki will be leading a conversation he calls “From Bad to Bright Future: How to Make it Happen”.

I hope you’ll be able to join one or more of the events listed above!

5.) The Economics of Abundance (video recording)

To end this newsletter on a more positive note:

It’s my belief that if we handle technology wisely – nanotech, biotech, infotech, cognotech, and more – we could soon reach a global situation of sustainable abundance for everyone.

I acknowledge that this idea provokes many reactions. Some people say it’s a deluded utopian fantasy. Some say it’s irresponsible to even entertain the idea. Others wonder about practical aspects: what might happen to the economy, to finance, to human communities, to democracy, to scarce real estate, to the environment, to the human sense of meaning, and so on.

That was the topic discussed in a London Futurists Swarm event, held on Wednesday 11th February: “The Economics of Abundance: Achieving it and Sustaining it”.

A rich conversation resulted, leading me to significantly extend and update the presentation I initially shared at that meeting. This video contains a performance of that revised presentation:

// David W. Wood – Chair, London Futurists