Dear Futurists,

If we don’t like the future that seems to be on our doorstep, what can we do about it?

In our most recent webinar, last Saturday, Gerd Leonhard pointed out features of what he calls “The Bad Future”. (You can watch the recording of that webinar here. Or view Gerd’s slides here.) These features include short-termism, isolationism, and gunboat diplomacy. In that future, transactions are valued higher than trust, power is valued higher than purpose, and technology is valued higher than humanity. Institutions are ridiculed. Diplomacy is seen as weakness. Truth is optional.

I ask again: if we don’t like that future, what can we do about it?

One answer is: not much. Each of us, as individuals, can make only a minuscule dent in the grand trajectory of human civilisation.

But another answer is: a great deal. Not when we act as individuals. But when we find extra strength in collaboration.

That collaboration can come in many forms. It can be coordinated action, with many people all pulling in the same direction together, so that our voices are magnified. It can be coordinated learning, with people sharing with each other the most valuable insights and skills they have discovered. And it can be coordinated recovery, with people providing timely encouragement and moral support to each other.

For more about how London Futurists aspires to enable all three of these forms of vital collaboration, read on.

1.) March for AI Safety – Sat 28th Feb

On the theme of extra strength from coordinated action, here’s the view of friends of London Futurists in PauseAI UK:

We do not yet know how to safely develop advanced AI systems. Despite this, companies are investing billions of dollars to scale these systems as quickly as possible, while regulation and safety research fail to keep pace.

Most people, including many working in AI, want stronger safeguards. But no single company or country will slow down while others race ahead. Public pressure is what breaks that deadlock and opens the door to democratically championed coordination and oversight.

A necessary step toward a global treaty is for the CEOs of the top AI companies to publicly state that they support a pause in principle. This means they would support a pause if every other player also paused at the same time, with independent auditors monitoring each company and country to ensure the treaty was honoured.

This demand is already gaining traction. Demis Hassabis, CEO of Google DeepMind, has publicly stated he would support a pause in principle. The next step is making this position impossible for other AI company leaders to avoid.

Accordingly – to continue quoting from the Luma event page for the March for AI Safety scheduled for Saturday 28th February:

PauseAI UK is joining Pull the Plug, Assemble and other groups for the first-ever coalition march of AI safety and ethics grassroots groups. This will be the first time that a range of organisations with different concerns and perspectives on AI come together around shared goals. We also expect it to be the largest protest to date focused specifically on AI safety and governance.

There will be testimonies from people already affected by AI systems and speeches from researchers and technologists who work closely with the technology. At the end, we will hold an assembly to reflect on public concerns about AI and what responsible development should look like. The march will be non-violent, legal, and coordinated with the police to ensure it is accessible and family-friendly.

This short video provides more context:

Given the number of different organisations who are joining together for this march, this event could become an early tipping point for a large change in public mood.

For more information, and to sign up to join the march in London, click here.

Note: anyone wishing and able to undertake a deeper dive into the ideas and activities of PauseAI should consider whether they can attend PauseCon Brussels on Saturday 21st and Sunday 22nd February, with the option of a public march in Brussels on Monday 23rd.

Speakers and facilitators at PauseCon Brussels include many people who have thought long and hard about options for ensuring AI development proceeds safely – and people who will reflect on their experiences trying to build more effective collaboration. As part of the agenda, I’m scheduled to lead a session on the Sunday morning, described as follows:

What makes social change initiatives succeed or fail? What are the pros and cons of urgency and any sense of panic? Why are leaders and stakeholders not terrified? Why do people often see the intellectual case for Pause but have no sense of urgency?

2.) Slowing down the rush towards dangerous new forms of AI

One reason many people are opposed to the idea of a “pause” in the creation of more advanced AI is that they fear that any delay in new versions of AI will mean people having to give up many of the benefits they hope these new AIs will deliver – benefits in areas such as improved green energy, improved scientific research, and improved medicines.

That’s a concern I hear a lot, especially in connection with my interest in research to reverse aging.

I’ve therefore been inspired to write a new personal blogpost about that topic: “Removing the pressure to rush”.

As the text on the image says:

Reducing AI risk isn’t just about slowing down; it’s also about removing the pressure to rush.

The article starts as follows:

Here’s an argument with a conclusion that may surprise you.

The topic is how to reduce the risks of existential or catastrophic outcomes from forthcoming new generations of AI.

The conclusion is these risks can be reduced by funding some key laboratory experiments involving middle-aged mice – experiments that have a good probability of demonstrating a significant increase in the healthspan and lifespan of these mice.

These experiments have a name: RMR2, which stands for Robust Mouse Rejuvenation, phase 2. If you’re impatient, you can read about these experiments here, on the website of LEVF, the organisation where I have a part-time role as Executive Director.

You can read the blogpost here. I’ll welcome any feedback on it.

3.) Learning with Machines – tomorrow, 7th February

When used wisely, AI systems can be wonderfully helpful. But when we are distracted, gullible, lazy, or naïve, interactions with AI can leave us confused, misled, drained, paranoid – and more distracted, gullible, lazy, and naïve than we started.

Practical advice on obtaining good results from “Learning with Machines”, despite these very real risks, is the subject of the London Futurists webinar that starts at 4pm UK time tomorrow, Saturday 7th February. As a reminder, our panel of speakers will be:

- Bruce Lloyd – Emeritus Professor, London South Bank University

- Peter Scott – Founder of the Centre for AI in Canadian Learning

- Alexandra Whittington – Futurist on Future of Business team at TCS

For more information, and to register to take part, click here.

Note: after listening to our Discord Swarm discussion on Wednesday on the theme of Dario Amodei’s essay The Adolescence of Technology, Bruce Lloyd (one of the speakers tomorrow) went through another exercise in his own process of “learning with machines”.

As Bruce described it:

AIs (ChatGPT, Claude, Copilot, Gemini, Grok, Perplexity, Qwen, DeepSeek and NotebookLM) explore AI risks as outlined in The Adolescence of Technology by Dario Amodei. Are there any others and what needs to be done to minimise them?

Here’s the output – a set of slides produced by NotebookLM:

(If you click on the image, it should open this PDF file.)

That’s pretty impressive. If you want to hear how Bruce created it – and to discuss caveats about the process and the output – then join our webinar tomorrow!

4.) Special guests at our forthcoming Freaky and Fabulous Futures event

We are very fortunate that two distinguished international futurists will be joining our gathering on Friday 13th February at Ye Olde Cock Tavern:

- Celia Black, Co-founder and Chief Marketing Officer of Kurzweil Technologies, and long-time advisor and researcher for Ray Kurzweil

- Charlie Kam, the producer of the international TransVision 2007 conference in Chicago, the candidate of the U.S. Transhumanist Party in the 2024 US presidential election, and the creator of many well-loved transhumanist music videos.

One example of Charlie’s creativity is I am the very model of a singularitarian (now 18 years old!), a playful adaptation of a famous song by the English duo Gilbert and Sullivan:

There’s a rumour that Charlie will be presenting a new song at our Freaky/Fabulous event – a special re-imagining of a renowned composition by another famous English tunesmith. If you attend the gathering, you’ll have the chance to vote whether it is “Freaky” or “Fabulous” (or maybe both)!

Note 1: My own better half, Hyesoon, whom some of you often used to meet at our events at Birkbeck College in years past, will also be joining us on this occasion.

Note 2: If you look down the list of people who have RSVP’ed Yes for this event, you’ll see a number of other distinguished futurists from around the world. It should be a very special gathering.

5.) Midweek futurist events – Tuesday and Wednesday

Don’t forget that there will be an online Google Meet conversation on Tuesday evening, jointly facilitated by Matt O’Neill and me, with the title “What we must never give away to AI”.

And on Wednesday evening, from 7:30pm UK time, there will be another Discord Swarm conversation.

I’ve not set the topic for this one yet. I’m open to suggestions – which you can raise in the #general-chat channel in the London Futurists Discord.

The default choice will be: continue discussion of points raised during our Saturday webinar and/or the Google Meet conversation on Tuesday – and consider follow-up actions.

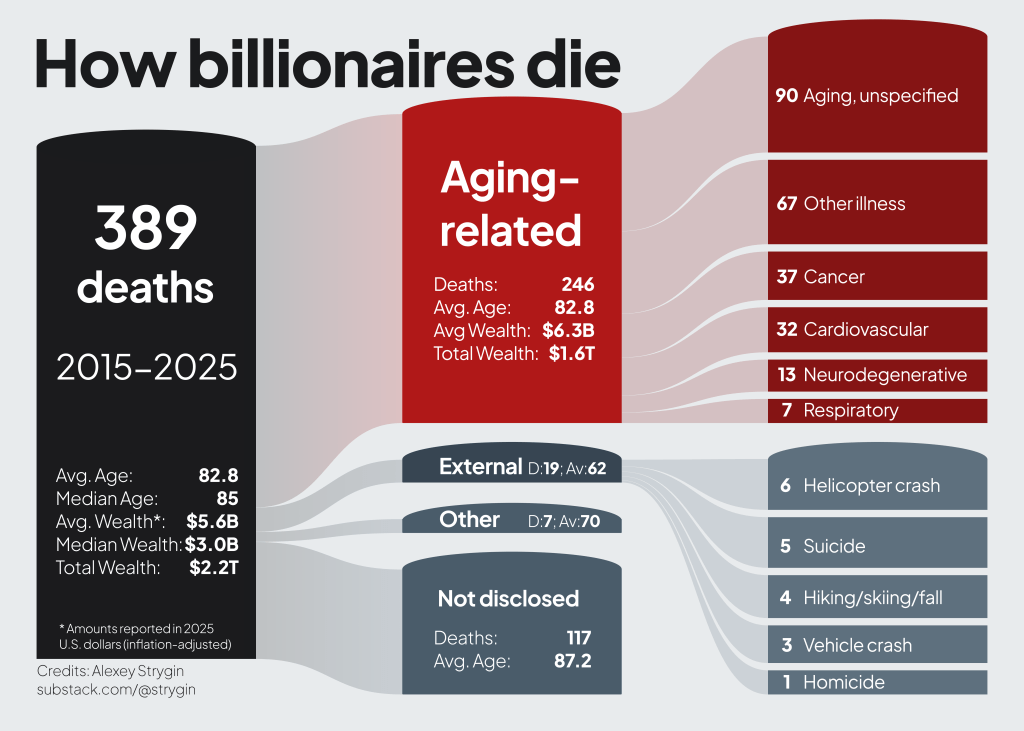

6.) How billionaires die – and what they should do about it

I’ll finish by drawing your attention to an unusual but thought-provoking piece of research by Alexey Strygin, a bioentrepreneur who is focused on advancing longevity biotechnology. Alexey is also a leading member of the Vitalism Foundation (tagline: Build the strategic network to defeat aging & death).

Here’s the opening paragraph of the article:

Imagine you’ve finally joined the club of the ultra-rich. Now it’s time to enjoy it for as long as possible, right? You can buy everything, but can you buy more time? You have access to the world’s best healthcare, personal trainers, nutritionists, and cutting-edge treatments. With all that at your disposal, you’d expect to live longer. Do you? Are there secret longevity clinics or elite interventions reserved for those who are willing to spend?

Part of the background to the article is the fairly widespread idea that billionaires allocate huge sums of money in pursuit of their own personal longevity. But as Alexey shows, that’s far from being the case.

Here’s part of his conclusion:

The best individual strategy today remains unglamorous: healthy lifestyle, top-tier health system, low risk exposure. Personal health optimization – executive physicals, concierge medicine, experimental protocols – can detect and manage disease, but the returns diminish rapidly…

Past a certain point, more spending on personal health buys nothing…

What could change the equation is not personal spending but research spending. The only historically reliable driver of longer life expectancy is technological progress. Sanitation extended life. Antibiotics extended life. Vaccines extended life. Because most causes of death today are aging-related, the next leap requires solving aging itself – and that is a funding problem as much as a scientific one.

The funding gap is staggering. Over the past decade, 246 billionaires worth $1.56 trillion died of aging-related causes. Yet the WSJ estimates that the entire ultra-wealthy class has put $5B into longevity ventures over the past 25 years (about $200M/year).

The average billionaire dying of aging held $6.3B – more than the field’s entire quarter-century of funding from ultra-wealthy investors. A single additional commitment at that scale could materially change the pace of progress.
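For readers who like to check the arithmetic, the figures quoted above can be reproduced with a minimal Python sketch. The input numbers are the ones quoted from Alexey’s article; the variable names are illustrative choices made here, not anything from the article itself.

```python
# Figures as quoted above from Alexey Strygin's article.
# Variable names are illustrative assumptions, not taken from the article.
total_wealth_usd = 1.56e12      # combined wealth of billionaires who died of aging-related causes
billionaire_deaths = 246        # number of such deaths over the past decade
longevity_funding_usd = 5e9     # WSJ estimate of ultra-wealthy investment in longevity ventures
funding_years = 25              # period covered by that estimate

# Average wealth held by each of those billionaires
average_wealth_usd = total_wealth_usd / billionaire_deaths

# Average yearly longevity funding from the ultra-wealthy
funding_per_year_usd = longevity_funding_usd / funding_years

# Quarter-century of funding as a fraction of the wealth held at death
funding_share = longevity_funding_usd / total_wealth_usd

print(f"Average wealth at death: ${average_wealth_usd / 1e9:.1f}B")
print(f"Longevity funding per year: ${funding_per_year_usd / 1e6:.0f}M")
print(f"Funding as share of that wealth: {funding_share:.2%}")
```

On these inputs, the average comes out at roughly $6.3B and the yearly funding at $200M, matching the quoted figures; the last line adds one derived comparison that is not in the article, namely that the 25 years of funding amount to about a third of one percent of the wealth held by those 246 billionaires.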

To be clear, the recommendations in Alexey’s article aren’t about what billionaires should do so that they alone can live longer. These recommendations would result in treatments whose price can surely be driven down quickly, so that all people could, if they wish, have much longer healthspans and lifespans.

So, in case you happen to be in touch (directly or indirectly) with any billionaires, I suggest you point them at Alexey’s analysis, and follow up with the recommendation I covered in news item #2 above!

// David W. Wood – Chair, London Futurists

https://www.facebook.com/share/v/1DBWCdues1/

Kind Regards,

Graham Mcnally
Agricultural Production Manager, NGIP Group of Companies
Mobile: +675 7178 2993
Email: gmcnally@agmark.com.pg
Trunk Line: 7411 1424 | 982 9055 Ext: 148

NGIP Haus, Level 1, Talina, Kokopo
P.O.Box 1921, Kokopo, ENBP
Papua New Guinea
http://www.agmark.com.pg

David, et al.

https://www.facebook.com/share/r/1LTg7KpymW/

Please comment.
