Dear Futurists,

How does public awareness change?

First slowly, and then quickly.

At first, for a long time, people don’t allow themselves to entertain certain ideas, since these ideas would (they think) be disliked or even mocked by their friends, neighbours, colleagues, or family.

But when it becomes clear that these ideas do, in fact, have wider public support, a tipping point can occur. Long slow change gives way to rapid change.

I perceive that to be the case with the curve of growing public awareness of the existential nature of forthcoming new generations of AI, sometimes called “frontier AI models”. I’ll come back to that subject later in this newsletter.

But I’ll start with another example of growing public awareness – a change in awareness that will be accelerated by the Dublin Longevity Declaration that was published yesterday.

1.) An unprecedented consensus statement

Here’s how the LEV Foundation announced the Dublin Longevity Declaration:

Think curing aging is impossible? Think it would be a bad idea? Think again.

In spite of ever-growing evidence to the contrary, and determined outreach by forward-thinking individuals, the majority of the world’s population remain convinced that aging, along with the disease and death it brings, is inevitable – and by extension, that attempts to combat it are unworthy of serious recognition or support.

Over the course of 2023 LEV Foundation has coordinated an effort, conceived by Martin O’Dea and Dr. Aubrey de Grey, to lay that lamentable opinion to rest – and assert instead that an immediate expansion of work to extend healthy lifespans is not only credible, but indeed crucial to the quality of our collective future.

In collaboration with primary author Professor Brian Kennedy, with input and enthusiastic endorsement from iconic researchers and leaders across the field of longevity medicine and allied fields, we are now able to publish the result of that effort – the Dublin Longevity Declaration: Consensus Recommendation to Immediately Expand Research on Extending Healthy Human Lifespans.

The authority of the renowned individuals who have put their names to the Declaration will play a vital part in achieving its principal goal: to drive an urgent, worldwide increase in resources devoted to the control of aging-related disease.

There is a second vital factor, however, in swaying the hearts and minds of those who control budgets for both public and private sources of funding: the extent of public support for that goal.

Thus, whatever your background, we encourage everyone who reads the Declaration and agrees with its message to add your signature, and encourage your friends and colleagues to consider doing the same.

Aging and its consequences affect us all. If you’re not happy about that, this is a unique opportunity to say so!

As I write these words, new signatures are arriving thick and fast at the site. It’s a pleasure to have been part of the team that made this Declaration possible.

In case you’re wondering why it is the “Dublin” Longevity Declaration: The ideas for the Declaration were discussed with speakers in the run-up to the Longevity Summit Dublin held in August 2023, where the forthcoming launch of the Declaration was also first announced.

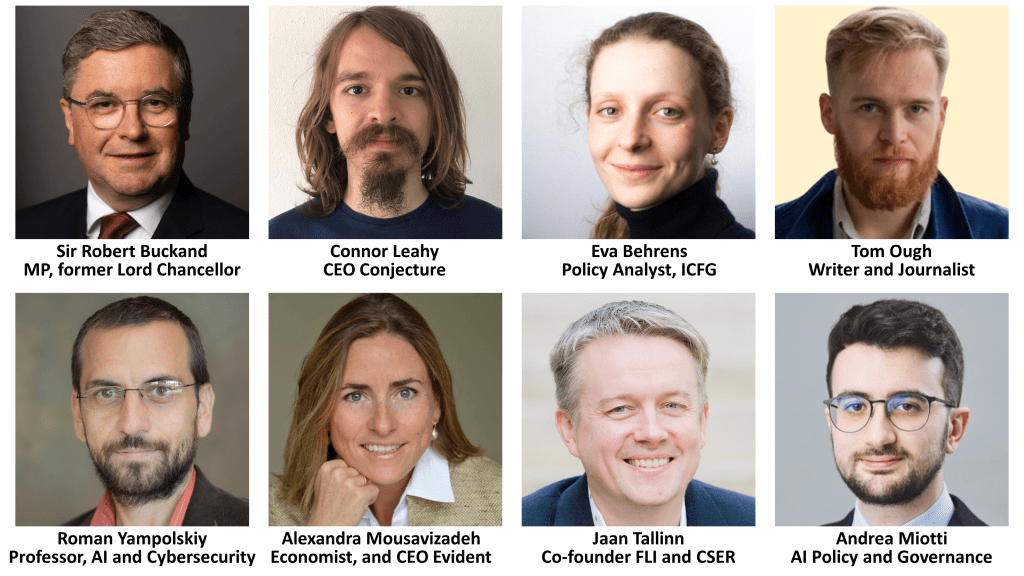

2.) More speakers and panellists for Conway Hall event, 10th October

AI used to be a relatively minor part of human life. But now it’s everywhere. And it’s becoming more powerful all the time. The potential of this technology for tremendous good – and for tremendous harm – is no longer a curiosity to be explored in a Netflix drama series or a Hollywood blockbuster. It’s something to which the world’s most powerful politicians are giving increasing attention.

An awareness that next-generation AI might have both wonderful and dreadful consequences is just the beginning. The next step is to develop realistic scenarios for how things could plausibly develop – and (critically) how the default trajectories could be steered to better outcomes.

That’s what will be discussed at Conway Hall on Tuesday 10th October. A remarkable panel of diverse thinkers will be leading the discussion there.

The event has been organised by the Existential Risk Observatory and AI alignment solutions company Conjecture, with support from London Futurists.

The evening will include a talk by Professor Roman Yampolskiy (University of Louisville), speeches by Sir Robert Buckland MP and Connor Leahy (the CEO of Conjecture), and a panel discussion featuring leading voices from the societal debate and the political realm, namely investor Jaan Tallinn (co-founder of CSER), economist Alexandra Mousavizadeh (CEO of Evident), journalist Tom Ough, Eva Behrens (policy analyst with the International Center for Future Generations), and Andrea Miotti (AI and governance policy analyst at Conjecture). The moderator of the evening will be yours truly.

In addition to the presentations and panel discussion, there will be opportunities for members of the audience to ask questions.

Attendance at this event is free of charge.

For more details, and to register to attend, click here.

3.) Recording of the previous Bletchley Park preview event

The event just mentioned is part of an ongoing series of preview events taking place ahead of the global AI Safety Summit happening at the UK’s Bletchley Park on 1-2 November.

An earlier meeting in that same series took place on the 23rd September. Here’s a recording of it:

A number of people who took part emailed me afterward, saying they had found the discussion particularly helpful. I understand that at least one forthcoming independent podcast episode will offer an analysis of what was said there.

So, I recommend that you find some time to watch this recording!

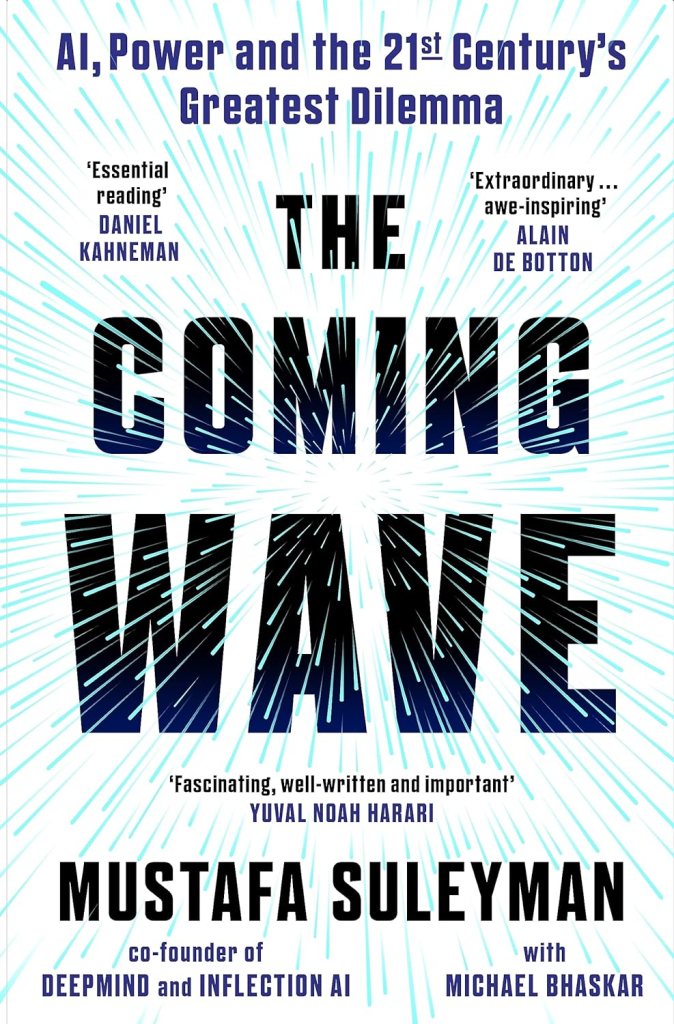

4.) The Coming Wave, by Mustafa Suleyman

Now that I’ve finished listening to “The Coming Wave: Technology, Power, and the 21st Century’s Greatest Dilemma” by DeepMind co-founder Mustafa Suleyman, I can give my verdict. It is, by some way, the best book written so far on how to handle the forthcoming deeply disruptive technological trends.

First, it provides a compelling yet chilling account of why the dilemma we will face will be so acute. The case for *containment* in these pages is incredibly strong, though at the same time, the book recognises how difficult (and *dangerous*) containment will be.

Second, the examples and narratives given throughout the book add real depth, so that the discussion never becomes academic or abstract. These are not hypothetical examples. They are examples of major risks that have already happened, which could credibly be magnified in the near future.

But most of all, the ten-point outline solution proposed in the closing parts of the book provides an excellent starting point on which further work can be built. Forget single “silver bullet” solutions. This framework covers many different areas of life and society.

Suleyman points to work by Daron Acemoglu and James Robinson that I have also highlighted in my own writings – the pivotal concept of a “narrow corridor” between states that are too weak (and where cancers within society cause havoc) and states that have unconstrained power.

In this analysis, the existential risks posed by an over-strong state need to be met by the distributed capabilities of a vibrant, informed, empowered society. That’s true for governance in general and for the governance of “coming wave” technologies in particular. Amen.

In a couple of places, I believe Suleyman is misguided in what he says about whether it’s important to think about an AI superintelligence (“singularity”) that is even more powerful than the AIs of the forthcoming frontier models. But I see this as an allowable weakness.

The insider accounts of discussions within Alphabet, Google, and DeepMind about possible alternative business models for DeepMind are particularly thought-provoking. As Suleyman says, the important thing to do, on hearing about that history, is to consider the lessons for the future.

Anyway, from now on, whenever someone asks for a careful, thoughtful, extended analysis of forthcoming AI and its implications, I’ll be pointing them at “The Coming Wave”.

// David W. Wood

Chair, London Futurists