Dear Futurists,

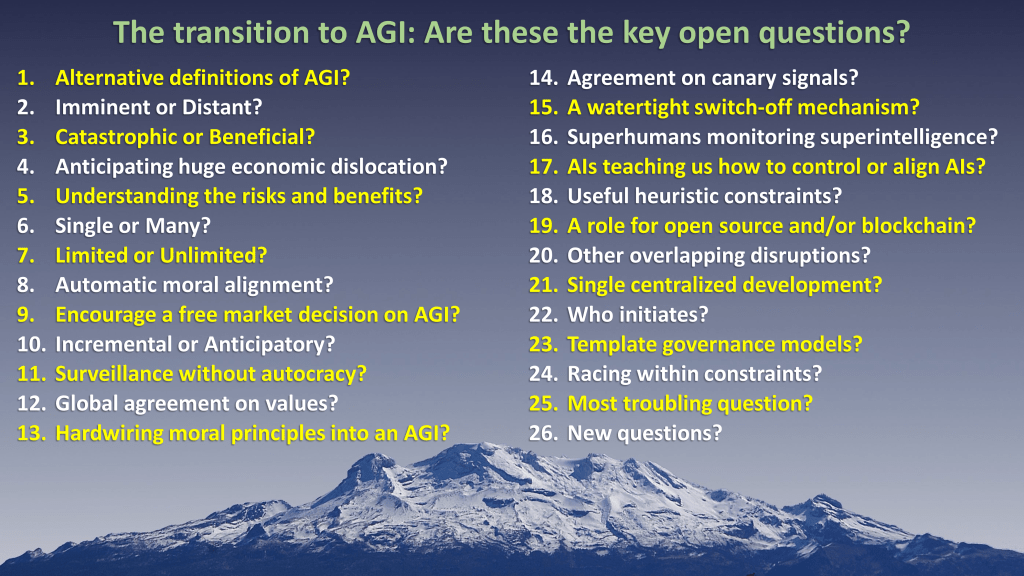

Round 1 of what will become a multi-round open survey is now in progress, with a set of open questions about the transition to AGI (Artificial General Intelligence).

Positive feedback to the survey so far

Here’s some feedback from various members and friends of London Futurists who have already given their answers and comments in response to the survey:

That was a truly fascinating exercise – many thanks David! Open discussion as a method of approaching the truth began c.2500 years ago and is still valid.

Long, but not painful. Well done!

That was one comprehensive survey! Submission is in, though I’d need more time to fully address all points.

I have filled in the survey. It took me one and half hour but the journey into the future was worthwhile. When filling the survey I had mixed feelings ranging from some despair (will we be able to tame the raging immature AI and bring it into the adulthood when it will become our benevolent master) to moments of some optimism (if this survey lands in the hands of some politicians it may really get them out of their seats and do something radical and effective very soon). In any case, the survey is exceptional both in its content and the overall approach

As I proceeded through the survey, I become less worried about the machines and more worried about the humans!

I’ve copied some of the responses so far to each of the questions onto the survey’s home page. Where respondents gave permission, I’ve attributed the comments to the people who made them; otherwise I’ve left them as “Anon”.

In case anybody has second thoughts about the comments assigned to their names, don’t hesitate to let me know, and I’ll update the page.

Everyone who has supplied answers has made some insightful comments. In several cases, the comments have been outstanding. If you read through the content on the survey’s home page, you’ll likely agree with me: there’s much there that is thought-provoking and challenging.

This is your chance to take part

Everyone who believes they have good insights to share is welcome to take part in the survey, and to provide comments explaining their choice of answers.

If you want to jump straight into the questions, here’s the link to the Google Form hosting them. Alternatively, you can immerse yourself beforehand in the discussion sections on the survey’s home page.

There’s a total of 26 questions. It’s up to you how many to answer.

After another few weeks, I’ll design and issue Round 2 of the survey, taking into account:

- Comments and suggestions made by the participants of Round 1

- My own assessment of which questions need more attention – and which new questions deserve to be introduced.

To be clear, the purpose of this survey isn’t to measure the weight of public opinion – nor even the weight of opinion of people who could be considered experts in aspects of AI.

Instead, the survey is intended to support and enhance the public discussion about the transition from today’s AI to the potentially much more disruptive AGI that may emerge in the near future. This will involve a number of rounds (iterations), with participants having the opportunity to update their answers and comments in the light of answers and comments supplied by other participants.

For some questions, a consensus may emerge. For other questions, a division of opinion may become clearer – along, hopefully, with agreement on how these divisions could be bridged by further research and/or experimentation.

As a result of the answers developed in response to these questions, and the ensuing general discussion, society’s leaders will hopefully become better placed to create and apply systems to govern that transition wisely.

Advice: good, bad, and vital

On that point, the good news is that senior politicians in numerous countries (including the UK’s Prime Minister) are nowadays asking for advice about the future of AI.

The bad news is that much of the “advice” being provided by so-called AI analysts is shallow, outdated, misinformed, or self-serving. That’s an alarming state of affairs.

Hence the importance of raising awareness of the key issues and opportunities arising in this fast-changing field. Which is where we, in the London Futurists community, have a vital role to play.

The 4 Cs of Superintelligence

For some helpful background to the questions that feature in the survey, why not find 32 minutes to listen to the latest London Futurists Podcast episode, The 4 Cs of Superintelligence?

The 4 Cs is a framework, developed by London Futurists Podcast co-host Calum Chace, that casts fresh light on the vexing question of possible outcomes of humanity’s interactions with an emerging superintelligent AI. The 4 Cs are Cease, Control, Catastrophe, and Consent.

I won’t say anything more about these four options at the moment, except to issue a small spoiler alert: the conversation between Calum and me expanded into more angles than we initially had in mind, so the episode ends in an unexpected cliff-hanger.

// David W. Wood

Chair, London Futurists